Should AI Progress Speed Up, Slow Down, or Stay the Same?

I don't know, and you don't, either.

Goals of the post

I’m going to try to convince you of two things: 1. That you don’t have (and no one has) a super informed opinion on the answer to the question in the title, and 2. That it would nevertheless be prudent for society to “install brakes” on AI progress in case they turn out to be needed.

Starting point: my views on the current pace

I’ll very briefly recap my perspective on how fast AI progress is before returning to the question of whether it should be faster, slower, or the same.

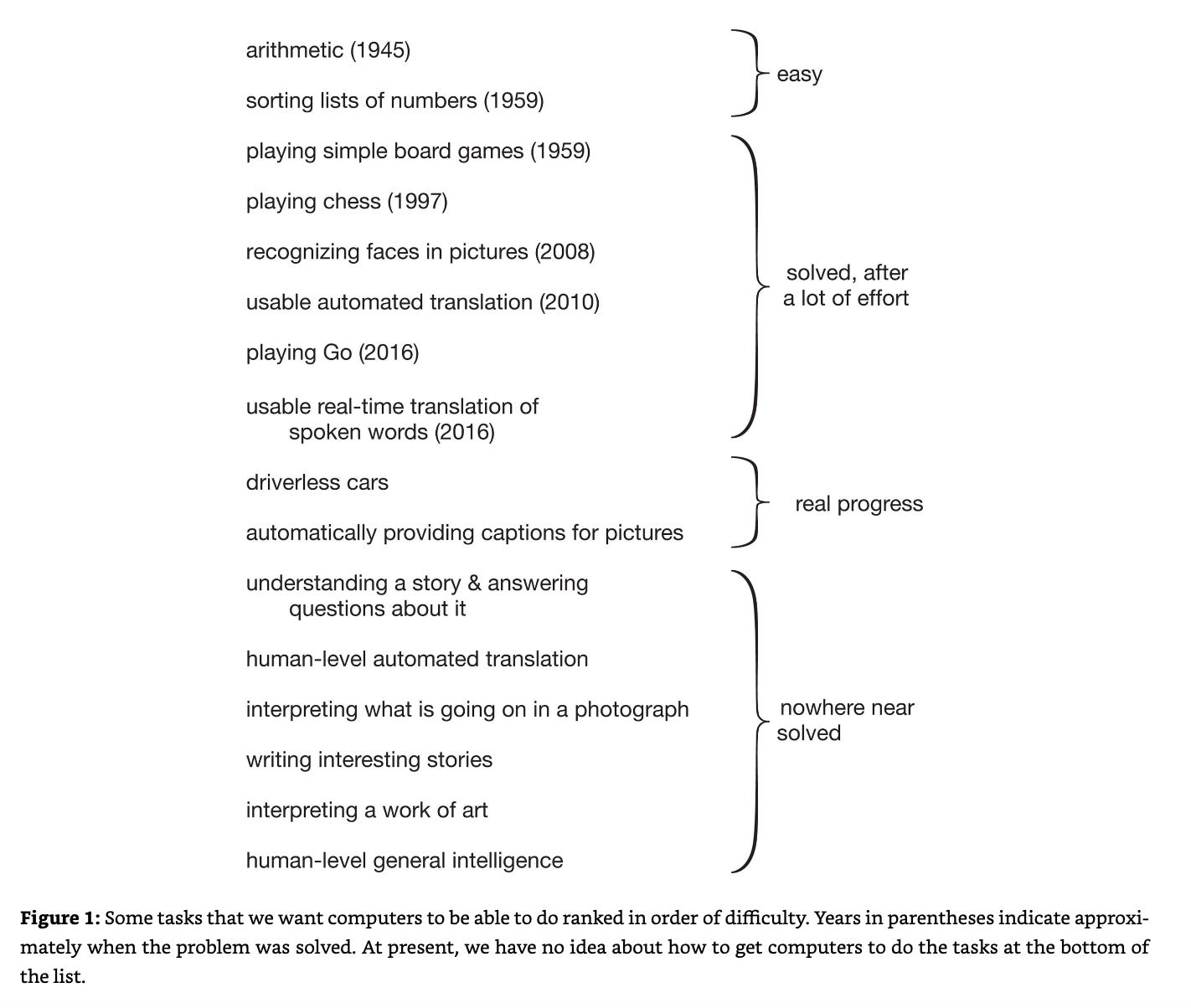

My view is that AI progress is currently very fast. Consider this figure from a 2021 book by an AI professor, which did not age well. E.g., “understanding a story & answering questions about it” and “interpreting what is going on in a photograph” were not completely solved but they were definitely advanced very substantially just a few months/years later. My point is not to dunk on this one example but just that serious people often get things wildly wrong on AI, and while there are certainly some cases of people being overly optimistic about AI progress, on balance I think most people would agree that betting against deep learning has been an unsuccessful strategy this decade.

Consider also this graph of the increasingly rapid saturation of new AI benchmarks:

AI systems are starting to outperform experts on test questions in areas like physics, chemistry, and coding. Consider GPQA, or Google-Proof Question Answering, for example. This benchmark is basically brand new, and is getting crushed now – AI systems can do better than most experts at solving isolated, graduate-level tasks that are not Google-able, even when the experts are given half an hour to solve the problem.

The very latest progress has been driven by the transition to a new paradigm of AI systems thinking things through before giving an answer, in what’s called a “chain of thought.” I recommend checking out this whole post (from which I took the image above), including the specific chain of thought examples, to get a sense of how wild this is. Be sure to click on the parts that say “Thought for X seconds” to see the full thing.

GPQA is an example of isolated, short-time-scale tasks, and AI systems can’t yet outperform experts on “long-horizon” tasks that take many hours/days/weeks/years, but companies are actively pushing on this and I’m aware of no good reason why they won’t succeed this decade. These advances are starting to be paired with robotics, which will take longer to solve than tasks that can be done remotely, but again there’s no obvious in-principle blocker here, and really smart AI systems will undoubtedly help engineers at robotics companies move faster.

There’s much more to say/discuss here but I’m just briefly laying my cards on the table.

Why the title of the post is a tricky question

Besides the pace of AI progress being controversial (though less so over time in my view), the question is vague, in that you could try to speed up or slow down AI progress at various scales – e.g., at the level of an individual company, at the level of a country or set of countries, or globally. And you can also draw various distinctions between different types of AI progress, and make different claims about different types. See, e.g., the distinction drawn in this article:

The rapid advancement of artificial intelligence can be viewed along two axes: vertical and horizontal. The vertical axis refers to the development of bigger and stronger models, which comes with many unknowns and potentially existential risks. The horizontal axis, in contrast, emphasizes the integration of current models into every corner of the economy, and is comparatively low-risk and high-reward.

Below, I specifically focus on vertical scaling/progress at a global scale, though for space reasons I won’t justify this choice, other than to briefly say that vertical scaling is what a lot of people mean by AI progress, and that ultimately I care about global outcomes, so it makes sense to consider AI progress wherever it occurs.

One reason that some people might think this is a tricky question is that they think it’s a litmus test for people’s views on technology in general, which is a whole controversy of its own. That’s not the case, and in fact, there are plenty of people who are generally pro-technology but worried about AI.

The real reason this is a hard question, in my opinion, is that – even if you start from a shared baseline of reasonable ethical premises like “don’t kill everyone” and “more people vs. less people should benefit from technology” and “it’d be good for autocratic countries not to surpass democratic ones on AI” – it’s still hard to resolve the question without clarity on a bunch of related empirical questions, each of which is practically a field unto itself.

One’s answer to the overall ideal pace question might depend on answers to questions like:

Is making very capable AI systems safe going to be super easy, easy, hard, or super hard?

Is China overtaking the US on AI likely or not, and how does this vary with different steps that might be taken to change the pace of AI progress?

Is fast advancement in AI likely to help or hurt efforts to solve other major societal challenges/risks like climate change?

These individual questions are hard on their own, and integrating them into an overall framework to conclude something useful about the ideal pace of AI progress is also hard. There are many more such questions but these give you some flavor of the kinds of questions I think you should ask yourself if you think that the question in the title is straightforward.

Why we need brakes

By brakes, I mean well-specified and well-analyzed technical and policy options for slowing AI progress if it turns out that – based on answers to questions like those above – it makes sense to do so.

We should “install” (design and debate) brakes because the current pace of AI progress is significantly faster than society is able to understand and shape effectively, and that may or may not change soon — the gap could grow much larger, and events could spin out of control. Hopefully policymakers get on top of things, we soon see evidence that safety is easy, etc., but we don’t know that those things will happen, so let’s be prepared for various scenarios. See here for some discussion of the gaps in societal readiness for more advanced AI capabilities that I see (though note that this is such a fast-moving situation that I’d probably write it somewhat differently now).

The idea that the pace of AI progress is pretty concerning is common among regular people, by the way, though views on AI vary a lot by country (e.g., developing countries tend to be more excited about AI, which is a very interesting and important phenomenon I may say more about at some point).

I don’t think brakes as defined above exist, and I think the non-existence of brakes is part of why we see discussion of unrealistic ideas like AI companies pausing unilaterally (this won’t work, at least for very long, due to responsible AI development being a collective action problem). People see the problems, and look around for a solution, and come up with something simple, but policymaking is hard and requires foresight, debate, and serious research.

As part of a paper on computing power as a promising lever for AI policy, some colleagues and I talked about one idea for installing brakes – a “compute reserve,” analogous to a central bank, that would modulate the pace of AI progress based on the answers to questions like those discussed above. This has a bunch of problems/ambiguities, and I’m not saying it’s the right approach, but I do think there should be more discussion of this and related ideas. It’d also be good to see a discussion of various scenarios for taxing AI and the impacts that this would have on AI progress, the distribution of AI’s benefits, etc.

Why not also create a gas pedal?

If there weren’t one I’d suggest we create it, but there are already various small gas pedals that are being pressed pretty hard. For example, the CHIPS Act put more money into American semiconductor manufacturing; startups, venture capitalists, and big tech companies are constantly trying to increase vertical and horizontal scaling; educational institutions are constantly training AI researchers and engineers; consumers frequently vote for faster progress with their wallets by giving revenue to AI companies; etc. It’s also easier to speed things up unilaterally than it is to slow things down unilaterally, given the difficulty of coordinating on a slowdown. Hence I think it makes more sense, from an allocation of policy research attention perspective, to focus on the harder problem of slowing.

Why not keep things as-is?

Maybe this is actually the right pace of progress, and that’d be an interesting coincidence, but I haven’t seen a good argument to that effect. If you write one or find one, let me know. In no scenario should we just accept the status quo, even if we conclude that this is the right pace of AI progress specifically — there are many other things to be done, like increasing government expertise on AI, regulating frontier AI safety and security, etc. But I can’t currently rule out that this is roughly the right pace — I just don’t know, and again, don’t think you do, either. Being confident on this seems to require being confident on a bunch of really complex topics.

Conclusion and next steps

Again, my goal here was to argue 1. that you don’t have (and no one has) a super informed opinion on the answer to the question in the title, and 2. that it would nevertheless be prudent for society to “install brakes” on AI progress in case they turn out to be needed. Hopefully I made some headway on that.

Among other topics that I might explore in the next phase of my career, the pace of progress question looms pretty large currently. It’s related to the “AI grand strategy” cluster of topics I mentioned in this blog post and also very related to the cluster on regulation of frontier AI safety and security (although I don’t think that the connection between regulation and national competitiveness works the way some people think it does). And the current lack of brakes worries me a lot.

If you’re interested in talking more about this topic, please reach out to me here.