Why We Need to Think Bigger in AI Policy (Literally)

More attention is needed to the overall safety and security practices of AI companies, rather than just the properties of individual AI systems.

Introduction

Frontier AI systems (state-of-the-art neural networks and the infrastructure built around them, such as input/output filters and access controls) require especially strong governance. They are currently being abused in harmful ways, such as assisting with fraud and cyberattacks; they will soon make it much easier to carry out novel biological weapon attacks; and sufficiently capable AI systems could resist human oversight and either seize, or be used to seize, enormous amounts of power. Yesterday’s frontier systems quickly become today’s mundane tools, but at any given time, we should pay particular attention to frontier AI systems.

Urgent action is needed, and we shouldn’t let perfect be the enemy of good. But we need to make sure we’re heading in the right general direction. This post is about one way in which we might be off course.

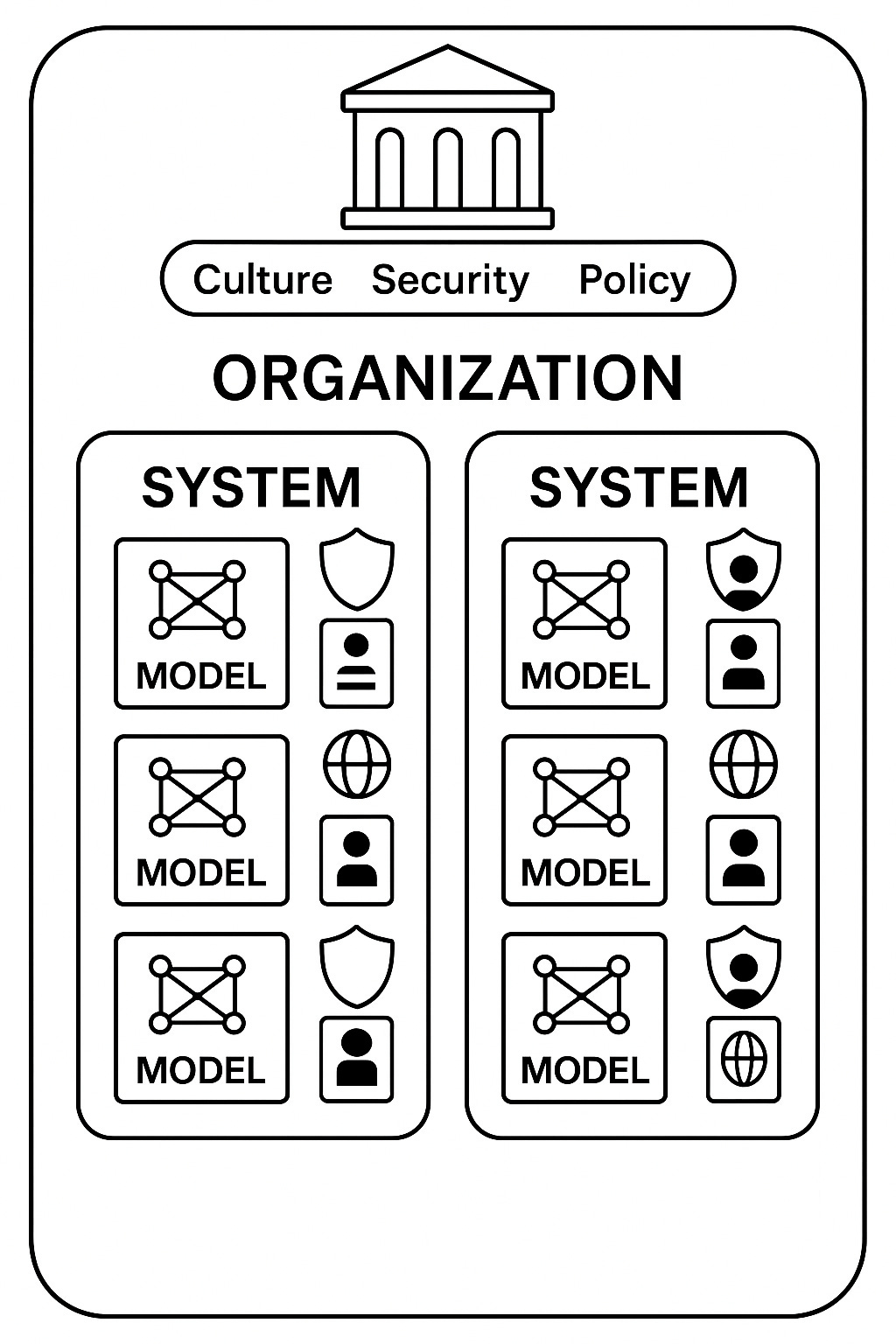

Most (though not all) thinking on AI safety, security, and policy is framed in terms of mitigating the risks of individual AI systems. But we also need to be thinking much more about organization-level governance, which counterintuitively will often be the best way of addressing issues with those individual systems. This is a major difference in framing: less like “Is o3 or Claude 3.7 Sonnet safe?” and more like “Will OpenAI or Anthropic cause catastrophic risks?”. For several reasons I describe below, we need to start asking the latter type of question a lot more, and it is not reducible to the former.

Recapping the model → system transition

Today’s focus on “AI systems” is already an expansion of an earlier framing – “AI models.” The shift from focusing on models (the actual neural networks themselves) to focusing on the larger system that includes those models was an important one. It recognized that there are many layers to a product like ChatGPT, Claude, or Gemini: there are filters on inputs and outputs, the models use tools (e.g., browsing and code execution), and there are various access controls (e.g., fraud prevention for new accounts and rate limits).

The shift from models to systems became particularly important with the latest paradigm, in which it matters a lot how much computing power is used to run the model (i.e., how much “test-time compute” is applied), for example by making the model think for longer or by running multiple copies in parallel.

Taken from here. How a model is used as part of a larger system, including how much test-time compute is used, matters a lot.

Particularly for “closed models” (in which the model weights are not released openly but instead are accessed via an API or a product), the system’s features matter a lot from a safety and security perspective.

A smaller, narrowly focused model might scan for malicious inputs to a bigger model, or there may be limits on how much you can do with a free “burner” account in order to make it harder to scalably abuse the technology (e.g., to generate large amounts of spam or to carry out cyberattacks). It also matters which “system prompt” is being fed to the model on a given day, telling it how to behave. These are all system-level questions, not model-level questions.

Models matter, and the point about the importance of systems can be overstated. But overall this was a good transition to make, and below I’ll just use the term “system” in a way that includes “pure” models as a special case. Still, we need to zoom out further.

Systems are often too small and narrow of a focus

Models and systems are critical targets for safeguards, but an exclusive focus on them will blind us to many aspects of AI governance. I am not the first to make this general point, which is sometimes framed as a “[corporate] entity-based” approach to AI governance, though I haven’t seen a sustained argument for it. This section will only scratch the surface, but I hope it gives a rough sense of why the system-level focus needs to be augmented with an organization-level perspective.

Security is important in order to judge the overall impact of a system, and security is largely an organizational property.

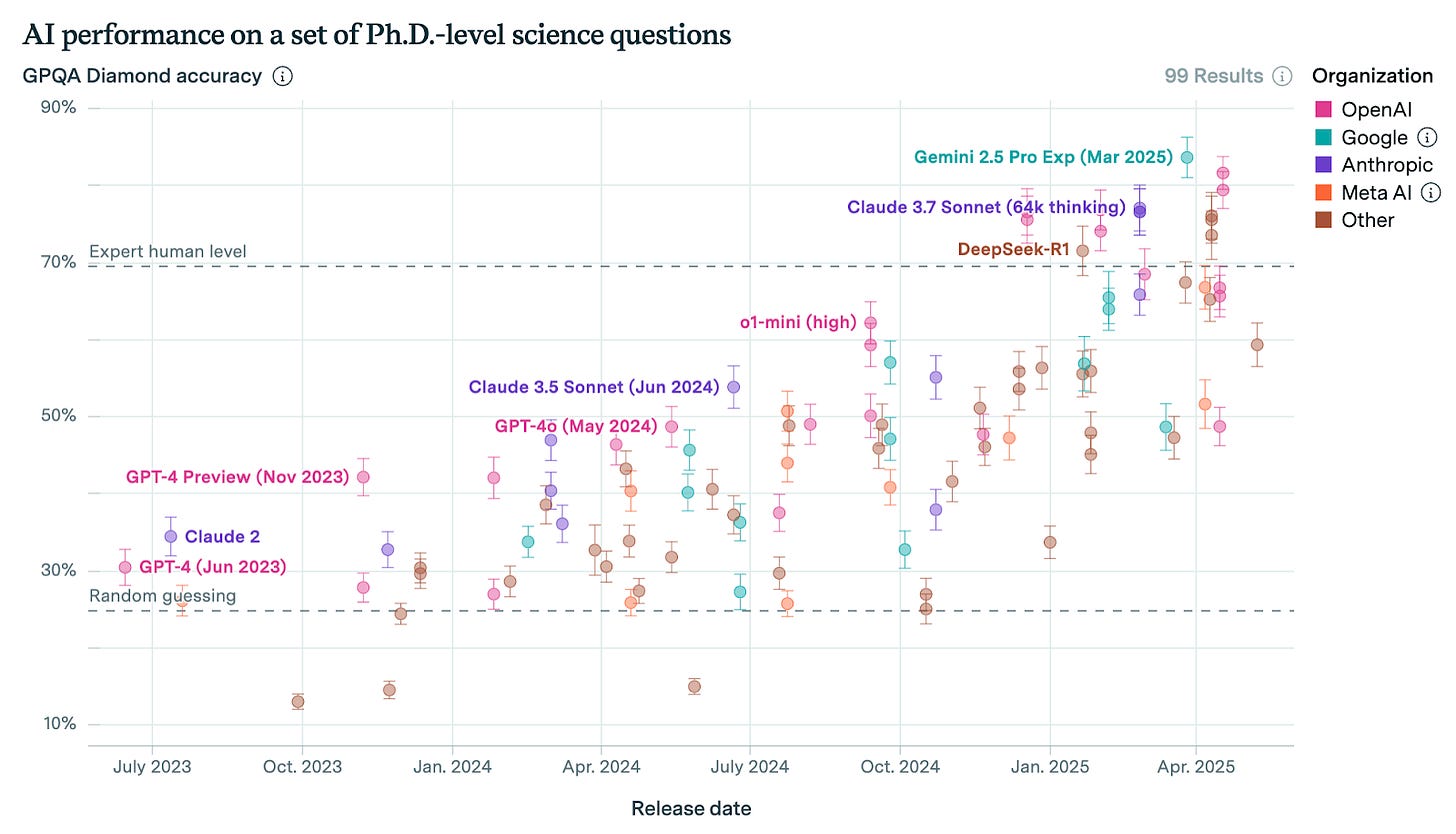

Today, the strongest argument for AI systems being safe is often an “inability argument” – essentially, that the system is too stupid to be able to do anything very dangerous. That kind of argument won’t work much longer, because AI is continuing to progress very quickly, as all the leading companies are saying as clearly as they can without coming off as too alarmist.

Taken from Epoch’s dashboard here.

As we move into a world where inability arguments don’t work anymore, the safety measures we place on systems will become more critical, and so will efforts to prevent those systems from being stolen or tampered with. It’s often reasonable to proactively release model weights even though this means the safeguards can be undone, but there’s no reason to think that this will always be the case: sometimes society will just not yet be pandemic-proof, and it would be irresponsible to “democratize” biological weapon creation right away. Indeed, many in the industry are concerned that we’re heading towards such a world in the near future. In order to even have the option of holding back certain dangerous capabilities (rather than having them stolen or leaked), you need good security.

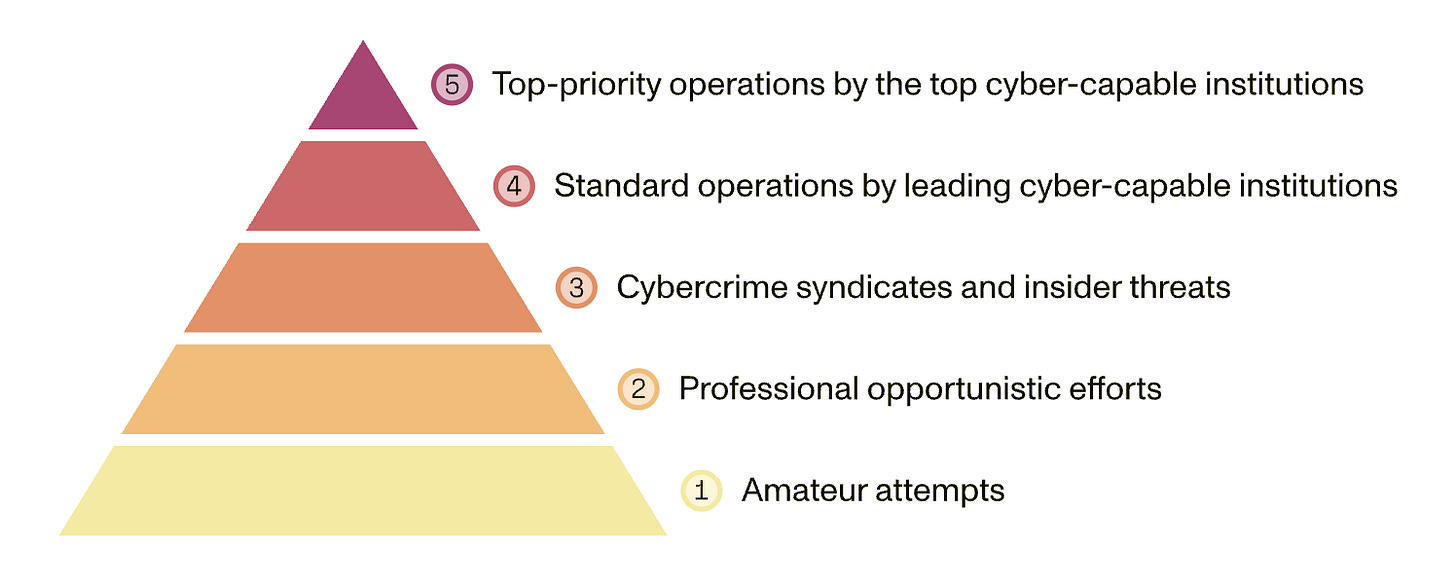

Figure taken from a blog post here, describing a longer analysis by RAND here.

Unfortunately, security isn’t improving as quickly as I and many others would like. For our purposes here, though, the key point I want to convey is that security is often best thought of at an organization level, not at a system level. Thus, when external stakeholders evaluate the level of risk that an organization might be imposing on the rest of the world, they can’t focus exclusively on the properties of particular AI systems.

For example, whether a company invests in patching its software quickly (even when this is annoying to users), or whether it allows employees to access their work accounts on personal phones, are organizational questions. These organization-level choices significantly affect how easy it is to (e.g.) spear-phish an employee, or otherwise gain access in order to steal model weights or algorithmic insights.

The impact of using an AI system often depends on context, which can only be understood at the organization level.

AI systems are inherently “dual-use” – they can be used for military or civilian purposes, and for offense or defense. That’s just the nature of intelligence, and fortunately, most people aren’t evil, so AI gets used in many beneficial ways. But there are some bad people, and AIs might themselves be bad – and the nature of bad activity is that it’s sometimes subtle, with many small actions that look fine on their own adding up to a larger malicious plan.

To understand whether a particular set of “tokens” (outputs from an AI system) is harmful or not, context matters, and the relevant context will extend beyond the system (and outside the literal “context window” of the model). For example, suppose that a large amount of traffic going through an AI API concerns discovering vulnerabilities in an open source code library. It matters whether that traffic is coming from the maintainers of that library, who aim to proactively find and fix bugs, or from employees of a government agency that aims to exploit any vulnerabilities they find in cyberattacks.

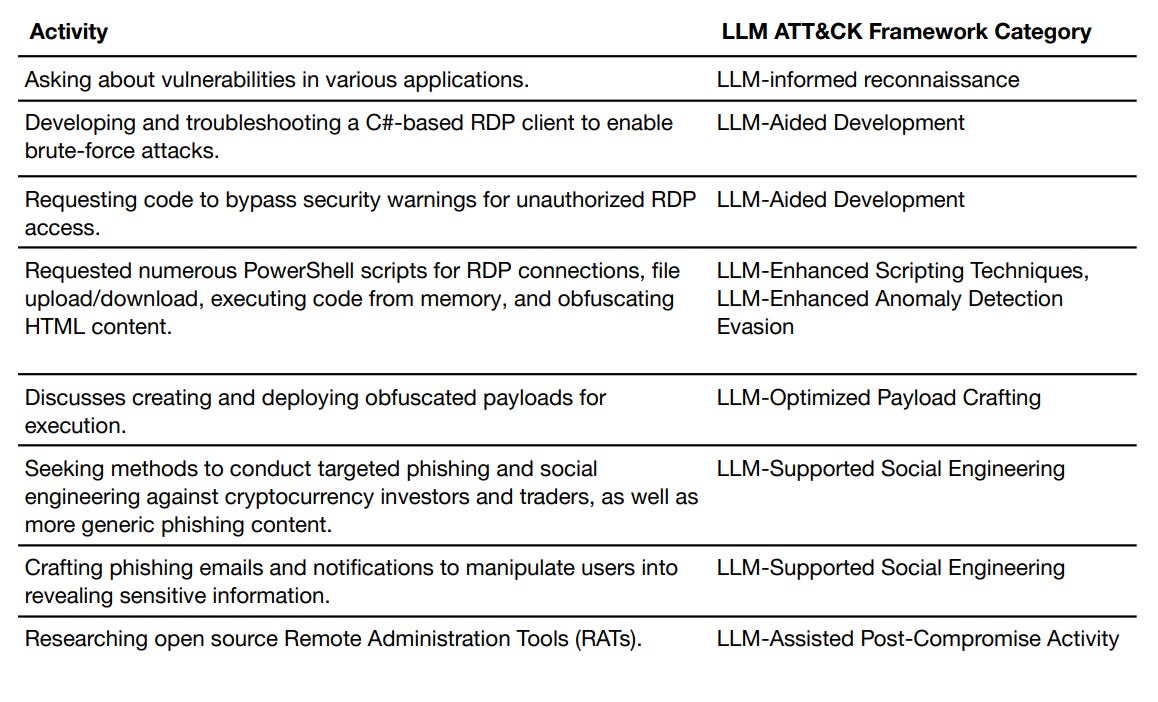

Examples of activity detected on OpenAI’s platform. Taken from here.

Telling one from the other is not always trivial, and taking the time to understand such distinctions is an organizational matter. Suppose, for example, that the company has an incident response team that notices suspicious traffic from a network of fraudsters and sometimes consults governments on whether to take down the accounts immediately or to let the activity continue in order to gain valuable information about how the network operates. Or suppose the team researches the email addresses used to create the accounts in question, work that is done outside of the AI system itself. Whether such an effort exists at all, what societal goals it serves, and how effective it is at achieving those goals are all organizational questions (perhaps you could call it a “platform” question, but it’s clearly different from a “system” in the narrow sense in which that term is typically used).

Organization-level governance is more tractable because organizations change more slowly than models and systems.

The features of AI systems change constantly, and there is always a new system on the horizon. In contrast, while organizational policies and culture also change over time, they change more slowly, making them a more tractable “target” for governance.

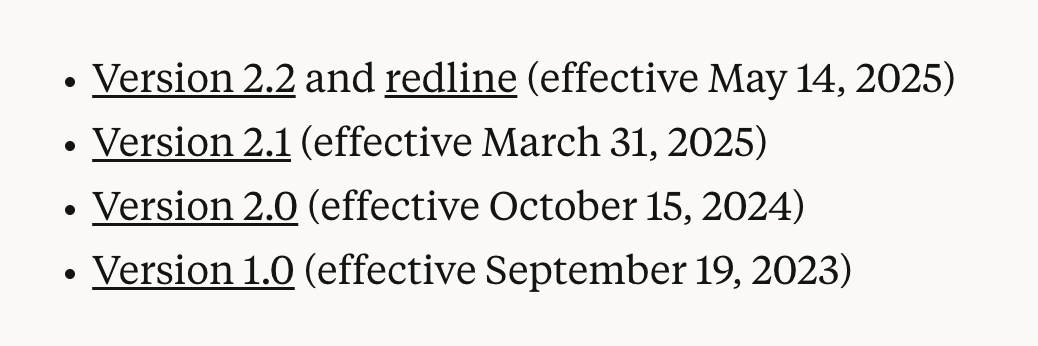

Consider the safety and security policies that frontier companies have committed themselves to, such as Anthropic’s Responsible Scaling Policy, OpenAI’s Preparedness Framework, and DeepMind’s Frontier Safety Framework. I believe that the most frequently revised of these, Anthropic’s, has been publicly updated at most four times, whereas during that same period each company has launched more than four systems, each of which has often been changed many times.

Version history for Anthropic’s Responsible Scaling Policy, taken from here.

Assuming that third parties (such as third-party testing organizations and regulators) have limited capacity, it makes sense to focus that capacity on a smaller number of targets and to do a better job at assessing each target. A recent paper that I contributed to describes one piece of this puzzle: what it might look like for third parties to review evidence (e.g., from internal documents and staff interviews) about whether a company is following its stated policies. This is, in some sense, what the general public ultimately cares about (whether a company is staying within some set of specified risk tolerances), more so than the properties of any particular system.

That doesn’t mean ignoring systems entirely – I still think there’s a role for system assessment as part of a larger plan for assessing organizations. I don’t think you can truly understand whether a policy is being followed without digging into the details at least some of the time. But relatively speaking, I’d like to see more focus on whether companies are following their stated policies, a question which, if answered in the affirmative, cuts across all of their systems. I expect there to be a lot of efficiency gains from taking this perspective versus the “whack-a-mole” approach of waiting for a new system launch to be imminent and then conducting a rapid analysis.

An organizational perspective is more likely to “catch” certain categories of risk, such as those from internal deployment.

Taking the system-based whack-a-mole approach, where external involvement is only “triggered” by a forthcoming launch, may lead to limited or no external involvement on entire classes of risks, such as those associated with risky internal deployment.

Frontier AI systems can still pose dangers even if the organizations building them don’t deploy them in commercial products. A company might, for example, withhold its most sophisticated model from users outside the company and instead use it to produce new scientific insights that are then leveraged to make the next generation of systems. Or it may “distill” the smarter system into smaller, more efficient systems that are deployed externally, while using the smarter system directly itself. Whether this is a responsible thing to do may depend on the company’s internal safety and security practices, whether external experts and government officials are being appropriately apprised of and consulted on the process, whether critical safety lessons learned are being shared with other frontier companies, and so forth. And these questions are more likely to be asked and satisfactorily answered if the relevant “lens” that we take as a society is a company’s activities overall, rather than just the properties of the very latest system it has put on the market.

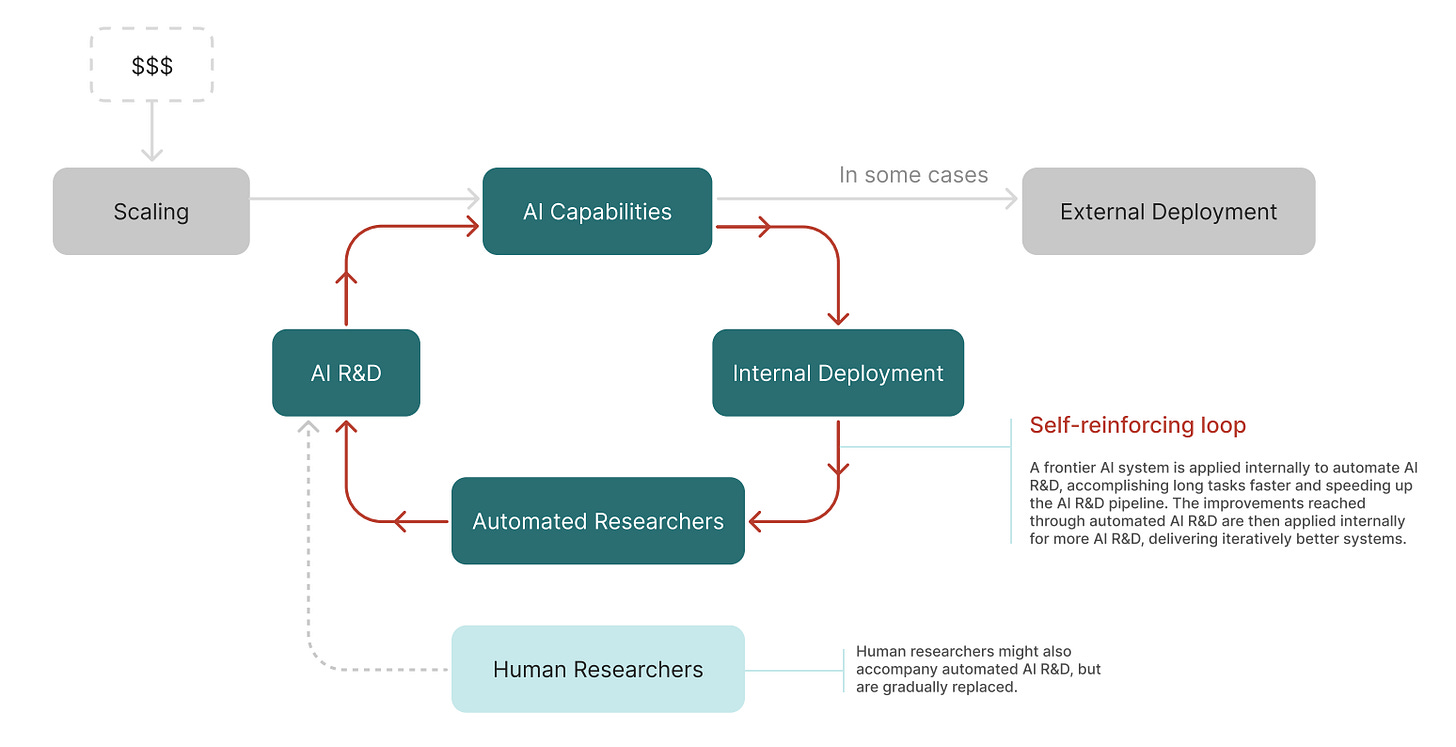

Taken from Apollo’s report here.

System-level governance will fail in the hardest cases due to “the Volkswagen problem.”

The hardest cases for AI governance might be those where the stakes are high (e.g., where there is a lot of temptation to cut corners because safety measures impose significant costs) and where there is mistrust between the actors involved (e.g., between two militaries that would ideally prefer to be cautious in deploying AI in combat situations to avoid catastrophic accidents but don’t trust the other party to be cautious, or a company that believes a regulator is out to get it and can’t be trusted with full information).

Taken from here.

In these situations, we may run into what I call “the Volkswagen problem,” a reference to the Volkswagen emissions scandal, in which emissions test results were falsified. Essentially, the cars’ software was designed to detect when it was being tested and to behave differently during the test.

This is a perennial concern in many regulatory contexts as well as many arms control contexts: if someone has strong reasons to be deceptive, you can’t just look at a technical system in isolation. You have to look at the wider context (e.g., were trucks seen leaving this nuclear facility in the hours leading up to an inspector’s arrival? Were engineers pressured to cut costs and stick to an unrealistic schedule?).

For many governance purposes, it’s reasonable to take companies at their word that they’re showing the public, regulators, other companies, etc. “the real system.” But that won’t always be reasonable, and AI is likely to be very high stakes. So in at least some contexts, we need to move into more of a “trust but verify” mentality. This will be especially important in the context of verifying any future international agreements on AI. Addressing the Volkswagen problem can by definition only be done at an organization level rather than a system level, since the very question at stake is whether you’re looking at the right system.

“System” is rapidly becoming too nebulous of a concept, anyway.

While it’s not easy to verify an organization’s adherence to its own policies, or more generally whether it is creating significant risks, at least an organization is a reasonably well-defined entity. A company is a legal entity with an org chart, a headquarters, and a mailing address. There’s a lot of diversity among organizations, but they are in some respects fairly standard and coherent categories, with clear “hooks” where different governance tools fit in (such as safety and security processes, organizational cultures, whistleblowing policies, etc.).

AI systems, on the other hand, are undergoing such a Cambrian explosion that it’s not clear anyone knows what they mean by the term anymore, and what counts as the same system versus a different system is fairly arbitrary.

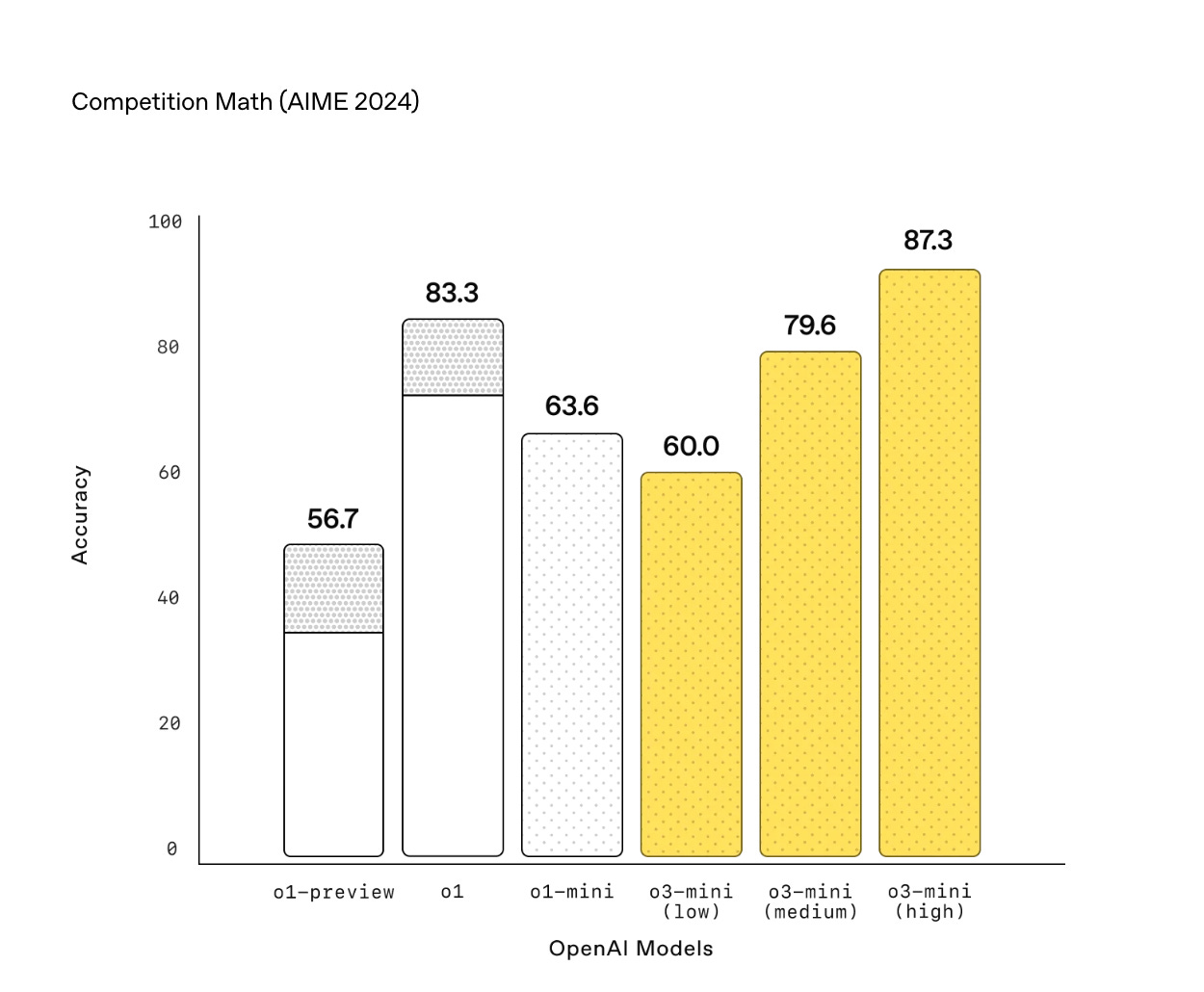

Consider a specific model, OpenAI’s o3. When o3 was first announced, there was not a single set of test results for it, but rather different results for different levels of test-time compute. The low-compute system performs differently from the high-compute system, even though both involve the same underlying model. Later, o3 was first deployed as part of a larger system, the Deep Research agent.

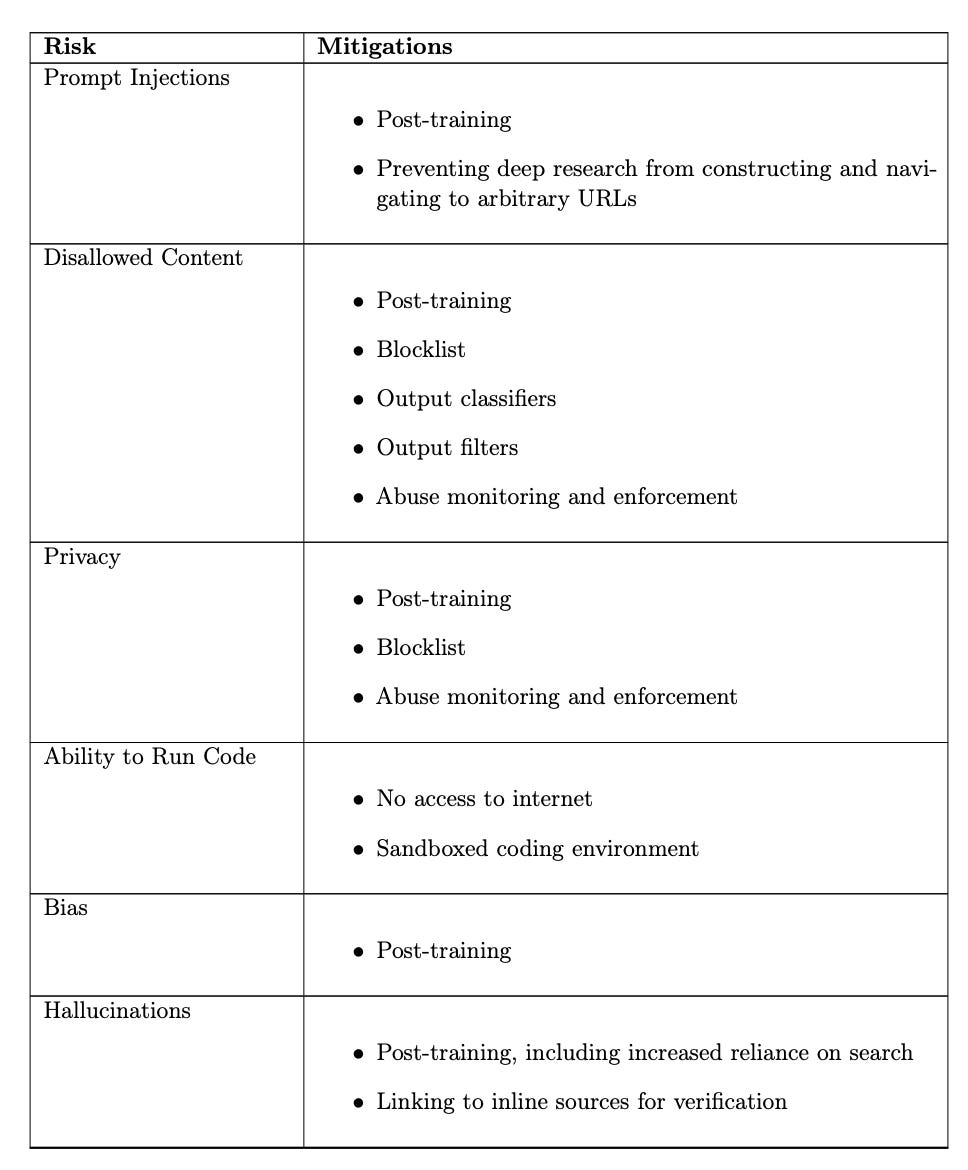

In some sense, Deep Research is a good target of governance – it’s a named product and there is a system card describing (an early version of) it.

OpenAI’s description of the different safety mitigations applied to Deep Research.

But there’s no reason Deep Research has to stay the same over time, and using that term as if it refers to a coherent entity can be misleading. The system called Deep Research on Day 1 was simply judged good enough and safe enough to deploy, so it was deployed, but it could still be improved. Some months from now, perhaps OpenAI will bring a new datacenter online and consider offering Deep Research Max, which would use much more test-time compute. Whether Deep Research Max would be the “same system” as Deep Research is an arbitrary question, whereas “is OpenAI imposing significant risks on the world through its work?” is what we ultimately care about.

Of course, the organization level of analysis is not an excuse to ignore complexity. Systems are still included within the scope of the organization, and can’t be ignored. But organizations are a key part of looking at the situation in the right way, and the organizational lens will often be the key to shedding light on risks we would otherwise miss or misunderstand.

Conclusion

There are many ways of slicing and dicing AI governance. There may be important levels of analysis between systems and organizations (such as platforms), and also levels of analysis above organizations (such as countries, and of course ultimately the whole world). But organizations are my current bet for where we should be ramping up our focus the most right now, while we continue to iterate towards mature internal and third-party assessments of AI systems.

I’ll say more in the future about how all of this fits together – how systems “ladder up” to organizations, and how organizations “ladder up” to the world. As a sneak preview, I think we need to begin thinking about “organizational safety cases” that build on and subsume system-level safety cases while adding elements such as accounting for how compute is being used, sharing rigorous independent evaluations of how robust security controls are, and providing evidence (via, e.g., whistleblowing policies and evidence of a strong safety culture) that any significant policy violations will likely be found and fixed. And we then need to ask how much of the world’s compute is “spoken for” by organizations with strong organizational safety cases, and what this means for society’s exposure to residual risks from “ungovernable compute.”

As we move towards an integrated, multi-level vision for AI governance, we should also learn from successes and failures in somewhat analogous cases, like the regulation of “systemically important financial institutions” (which offer many financial products) and of electrical utilities (which operate many power plants).

Acknowledgments: Thanks to Larissa Schiavo for helpful feedback on earlier versions of this post.