I want my Substack to be a pretty self-contained resource for anyone who wants to follow along with my research. In the past few months, I’ve published a few things that haven’t yet been mentioned here or were only mentioned in passing, so this post will quickly bring you up to date.

This will be my last blog post until my non-profit launches, so hopefully this batch of reading material can tide you over until then. In case you missed it, there’s a bit of a sneak preview of my non-profit in this blog post.

First, I contributed to two very closely related papers on the nuts and bolts of verifying international agreements on AI or rules related to AI:

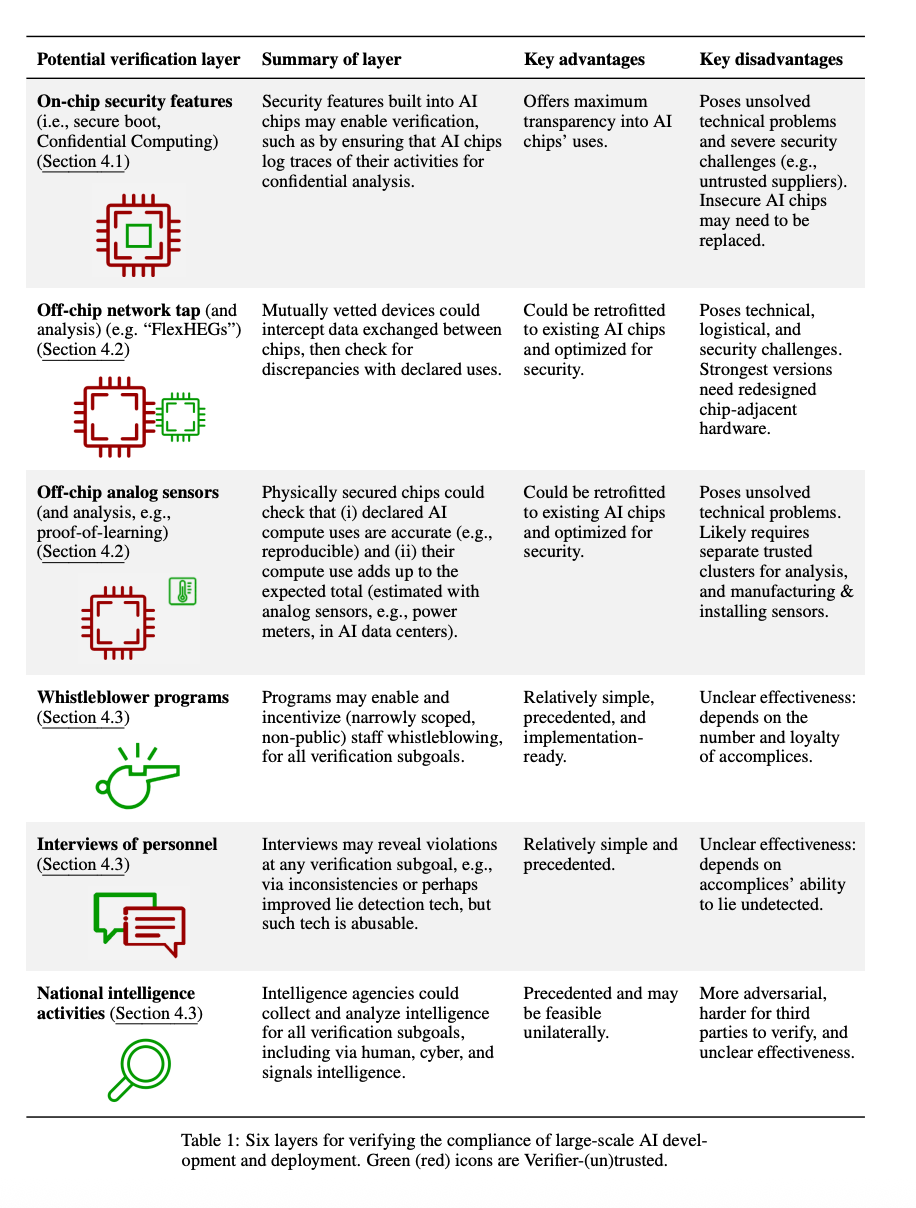

“Verifying International Agreements on AI: Six Layers of Verification for Rules on Large-Scale AI Development and Deployment.” Mauricio Baker, Gabriel Kulp, Oliver Marks, Miles Brundage, and Lennart Heim. RAND working paper.

“Verification for International AI Governance.” Ben Harack, Robert F. Trager, Anka Reuel, David Manheim, Miles Brundage, Onni Aarne, Aaron Scher, Yanliang Pan, Jenny Xiao, Kristy Loke, Sumaya Nur Adan, Guillem Bas, Nicholas A. Caputo, Julia C. Morse, Janvi Ahuja, Isabella Duan, Janet Egan, Ben Bucknall, Brianna Rosen, Renan Araujo, Vincent Boulanin, Ranjit Lall, Fazl Barez, Sanaa Alvira, Corin Katzke, Ahmad Atamli, and Amro Awad. Oxford Martin AI Governance Initiative research paper.

A figure from the first paper outlines six different “layers” of AI verification.

This kind of international verification — which is closely related to my current work on “frontier AI auditing” — is quite important to understand and prepare for. Companies and countries are tempted to cut corners by default and would benefit from some rules of the road, at least if they’re sure others will follow those rules. The overall conclusion across these papers is that solving this issue is tricky but not impossible, and there should be more investment in building the technologies needed to do it.

It was a privilege to get to “play around” in both of these big projects, which came from pretty different “camps” (a U.S.-government-affiliated/oriented think tank in the first case and academics from a wide range of institutions/countries in the second case).

Second, just this morning, RAND and Perry World House published an edited volume that I contributed to, entitled “Artificial General Intelligence Race and International Security.” My paper in this volume helps explain why I think the topic discussed above (verification) is important.

The volume brings together researchers with a wide range of backgrounds and perspectives, and in each case the paper authors were asked to be as specific as possible regarding the (typically implicit!) assumptions they make about the time until certain AI capabilities might be developed, the extent of visibility different countries will have into each other’s progress, etc.

My paper in this volume is called “Unbridled AI Competition Invites Disaster.” Unsurprisingly to people who follow my work, the perspective I outline is very bullish on AI capabilities and very concerned about the risks that may arise by default, but also hopeful that, through foresight and vigorous action, we can mitigate the worst of these risks.

Third, I contributed to a paper on the idea of “process compliance” reviews in frontier AI:

“Third-party compliance reviews for frontier AI safety frameworks.” Aidan Homewood, Sophie Williams, Noemi Dreksler, John Lidiard, Malcolm Murray, Lennart Heim, Marta Ziosi, Seán Ó hÉigeartaigh, Michael Chen, Kevin Wei, Christoph Winter, Miles Brundage, Ben Garfinkel, and Jonas Schuett. arXiv preprint.

The context here is that various frontier AI companies have — and increasingly, are required to have — “frontier AI safety frameworks” that describe various commitments they have made. But — and this is increasingly important as AI gets more capable — it’s hard to tell if they’re actually following them, and how rigorously. This is again very related to the themes in the papers above, in that process compliance is one of the things you might want to audit/verify, in addition to specific properties of a given AI system.

As I’ve discussed elsewhere, it’s important to think holistically about AI safety and security, and process compliance is part of that bigger picture. I was glad to be included in this collaboration, which brought together experts in various aspects of AI, auditing, law, etc. to make progress on an important problem. This paper is a great example of something that I think we need more of, which is synthesizing what we’re learning “on the ground” in AI with best practices that have already been crystallized in other domains. We don’t have time to figure out everything from scratch, and there are many similarities between AI and other domains.

Finally — and now for something that is actually not about auditing! — I contributed a proposal to the Institute for Progress’s “Launch Sequence,” which they describe as “a collection of essays, written by expert authors, describing concrete but ambitious AI projects to accelerate progress in science and security.”

My specific proposal is about using AI to accelerate cyberdefense. This is one of many areas where I think we should be doing much more in order to ensure that we actually “maximize the benefits of AI,” and in this case, maximizing benefits and minimizing risks go hand in hand: applying AI to cyberdefense will help us defend against AI that is leveraged for cyberoffense. If we do this right, we can do more than just prevent AI from making things worse — we can make things much better.

In “Operation Patchlight,” I describe two prongs of a vision for using AI to speed up cyberdefense:

Fix: Use cutting-edge AI to find and fix vulnerabilities in open-source code before bad actors can.

Empower: Fund the development and continuous improvement of AI-powered tools that help critical infrastructure defenders work more effectively.

The larger collection has a lot of great ideas in it, including some others that are related to the intersection of AI and cybersecurity.

While we’re on the subject of this blog post being or not being a one-stop shop, note that I don’t generally post links to podcasts, media interviews, etc. on here. Also, sometimes I share miscellaneous takes on AI policy on Twitter that aren’t worth the time to write up in a Substack post. And there’s a lot of older research stuff that I haven’t mentioned on my Substack (see also my Google Scholar page).

So for now, if you want to be totally up-to-date on all things Miles, you’ll have to follow my Twitter account and tolerate a fair number of bad jokes and random TV/movie recommendations. Sorry! Maybe I’ll get an AI to generate and maintain a truly comprehensive resource at some point…